Concurrency Doesn’t Mean Parallelism

I might be stating the obvious… but bear in mind, I don’t come from a traditional computer science background. I spent my early 20s teaching high school students computer programming, my late 20s fixing computers, and only got into software development in my 30s.

I’ve heard the term concurrency thrown around and seen it mistakenly translated as parallelism.

Technically, parallelism is one form of concurrency, but concurrency takes several forms, and not all of them achieve true parallelism.

A caveat: I have never been a bartender. Even so, this post leans on several examples of a bartender pouring drinks, and somehow finds a way to tie everything back to Ruby on Rails.

What is Concurrency?

Concurrency is loosely defined as the ability for different aspects of a program to be executed at the same time.

Looking at this definition, one might surmise that many things are literally happening at the same instant, but that would be a false assumption.

Concurrency can entail rapid switching between tasks, providing the illusion that everything is occurring at once. Let’s look at one example of concurrency.

The Bartender Example

Think about a bartender (let’s call him John) making two drinks: an Old Fashioned for you, and a Margarita for your friend.

It might look something like this…

1. John starts the Old Fashioned

2. He puts a sugar cube in a glass

3. John switches to the Margarita

4. John salts the rim of your friend's glass

5. John adds bitters to the Old Fashioned

6. John pours tequila into the Margarita

7. John adds lime juice to the Margarita

8. John starts muddling the Old Fashioned

9. Now it's time to find Triple Sec...

We can think of this as concurrency. John is making two drinks over the same stretch of time, but he is only ever working on one drink at any given moment.
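One way to picture John's step-by-step switching in Ruby is with Fibers: tasks that pause themselves and get resumed later, all on a single thread. This is only a rough sketch. The names `make_drink` and `tend_bar` and the recipe steps are made up for illustration, and the strict back-and-forth here is tidier than John's real order of operations.

```ruby
# Each drink is a Fiber: a task that can pause itself and be resumed later.
# Everything runs on one thread, so only one step executes at any moment.
def make_drink(name, steps, log)
  Fiber.new do
    steps.each do |step|
      log << "#{name}: #{step}"
      Fiber.yield # pause this drink and hand control back to John
    end
    :done # returned by the final resume, once no steps remain
  end
end

# John cycles through the unfinished drinks, doing one step of each per pass.
def tend_bar(recipes)
  log = []
  pending = recipes.map { |name, steps| make_drink(name, steps, log) }
  pending.reject! { |drink| drink.resume == :done } until pending.empty?
  log
end

recipes = {
  "Old Fashioned" => ["add sugar cube", "add bitters", "muddle"],
  "Margarita"     => ["salt the rim", "pour tequila", "add lime juice"]
}

puts tend_bar(recipes)
```

The log alternates between the two drinks, yet no two steps ever run at the same instant.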

Single Threading vs. Multithreading

This is a good time to distinguish between single threading and multithreading. Both are ways of handling concurrent work, but they differ in how the work gets done. Let’s revisit the bartender example above.

We should think of this example as single threading.

Now Multithreading…

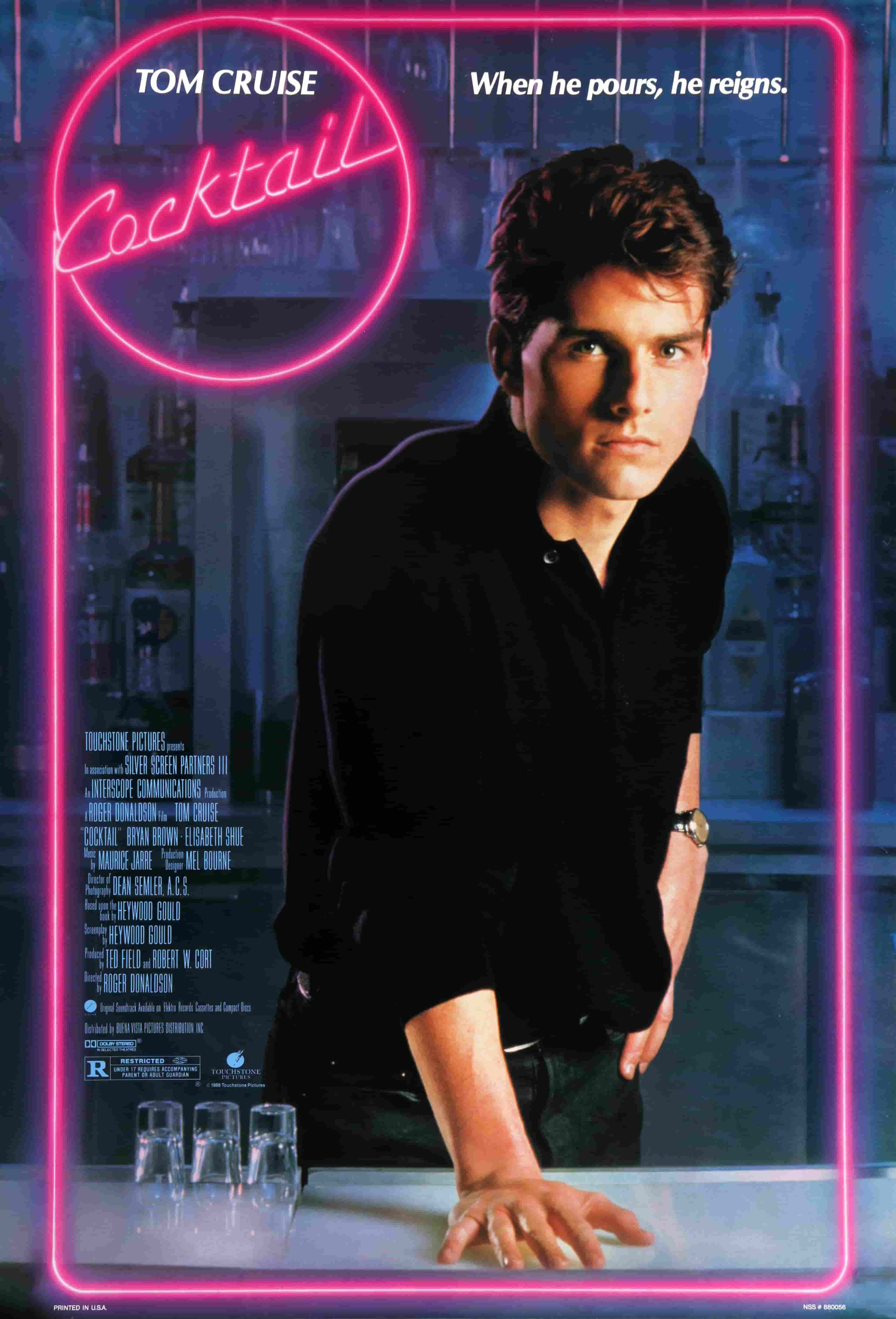

Imagine the bartender, John, is stylish, has hair, and is actually Tom Cruise from Cocktail.

John now uses both hands to mix your drink and your friend’s at once. Patrons cheer at his coordination and sophistication.

In his right hand, John is muddling sugar and bitters for your Old Fashioned.

In his left hand, he’s shaking your friend’s Margarita.

The task is still to mix two drinks, but in this case, John is using both hands (threads) to move both drinks forward.

We should think of this approach as multithreading.
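In Ruby terms, each of John's hands could be a `Thread`. The sketch below is an illustration, not a recipe: `mix` and `mix_with_both_hands` are hypothetical helpers, the steps are invented, and `sleep` stands in for real work.

```ruby
# One "hand" of work: returns the finished steps for a single drink.
def mix(drink, steps)
  steps.map do |step|
    sleep(0.01) # stand-in for real work; while one thread sleeps, the other runs
    "#{drink}: #{step}"
  end
end

# Starts one thread per drink, then waits for every thread to finish.
def mix_with_both_hands(recipes)
  hands = recipes.map { |drink, steps| Thread.new { mix(drink, steps) } }
  hands.map(&:value) # Thread#value joins the thread and returns its result
end

drinks = mix_with_both_hands({
  "Old Fashioned" => ["muddle sugar and bitters", "add whiskey"],
  "Margarita"     => ["shake", "strain"]
})
puts drinks
```

One thing worth knowing: in CRuby (the standard interpreter), a Global VM Lock lets only one thread run Ruby code at a time. The two threads take turns, switching whenever one of them waits, much like one brain coordinating two hands.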

What is Parallelism then?

Glad you asked, because I was starting to ramble. Parallelism is another type of concurrency.

It is not the same as single threading or multithreading. It entails using multiple processors (or cores) to handle distinct parts of a task at the same instant. It’s often called “parallel processing”.

Parallelism, in the computing sense, is when we have two distinct processes running at the exact same time, being handled by distinct processors.

Imagine John makes your Old Fashioned, while another bartender (let’s call him Moe) makes your friend’s Margarita. Two distinct operations, two distinct cores (bartenders).

Why does any of this matter?

It matters because the approach you choose can have serious implications for your app’s performance.

Let’s look at an extremely contrived example: an order processor that accepts credit card payments and runs on a single-core server.

This order processor requires 3 sequential steps to complete: card validation, card charge, receipt.

With single threading, and two orders being processed, it might look something like this:

1. Order 1 starts processing...

2. Card 1 is validated

3. Card 1 is charged

4. Receipt 1 is sent

5. Order 2 starts processing...

6. Card 2 is validated

7. Card 2 is charged

8. Receipt 2 is sent

With multithreading, and two orders being processed, it might look something like this:

1a. Order 1 starts processing

1b. Order 2 starts processing on a different thread

......

2a. Card for Order 1 is validated

2b. Server switches to validate Card for Order 2

......

3a. Card for Order 1 is charged

3b. Server switches to charge Card for Order 2

......

4a. Receipt is sent for Order 1

4b. Server switches to send receipt for Order 2

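In multithreading, threads share memory, just like the bartender with two hands uses one brain to coordinate between drinks. Sharing keeps memory use low, but the threads have to coordinate access to anything they share, and that coordination can affect performance.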
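The two walkthroughs above can be sketched side by side in Ruby. Treat this as a toy: `process_order`, `single_threaded`, and `multi_threaded` are hypothetical names, and `sleep` stands in for waiting on a payment gateway or mail server.

```ruby
# Hypothetical order processor: three sequential steps per order.
def process_order(id, log, steps = %w[validate charge receipt])
  steps.each do |step|
    sleep(0.01) # stand-in for waiting on a payment gateway or mail server
    log << "order #{id}: #{step}"
  end
end

# Empties a thread-safe Queue into a plain Array once all work is finished.
def drain(queue)
  Array.new(queue.size) { queue.pop }
end

# Single threading: order 2 cannot start until order 1 has its receipt.
def single_threaded(ids)
  log = Queue.new
  ids.each { |id| process_order(id, log) }
  drain(log)
end

# Multithreading: one thread per order. While one thread waits, the single
# core switches to another, so the steps of different orders interleave.
def multi_threaded(ids)
  log = Queue.new
  ids.map { |id| Thread.new { process_order(id, log) } }.each(&:join)
  drain(log)
end

puts single_threaded([1, 2])
puts "---"
puts multi_threaded([1, 2])
```

The single-threaded log always lists all of order 1 before order 2. The multithreaded log mixes the two, and the exact mix can change from run to run, though each order's own steps stay in sequence.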

Now, let’s look at parallelism. Note that parallelism requires multiple cores to achieve true parallel execution, so the contrived single-core server example does not apply here.

What would parallelism look like with a multi-core processor?

1. Order 1 processes on Processor1, Order 2 on Processor2

2. Cards are validated on respective Order Processors

3. Cards are charged on respective Order Processors

4. Receipts are sent via respective Order Processors

At no time do Processor1 and Processor2 interact, because they are running on different cores.
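Here is a minimal sketch of that idea using separate operating system processes, assuming a Unix-like system where `fork` is available (it is not on Windows or JRuby). The helper names `handle_order` and `process_in_parallel` are hypothetical. Whether the children really land on different cores is up to the operating system's scheduler.

```ruby
# Hypothetical per-order work: the three steps from the example above.
def handle_order(id)
  %w[validate charge receipt].map { |step| "order #{id}: #{step}" }
end

# Runs each order in its own child process. Processes do not share memory,
# so each child sends its result back to the parent through a pipe.
def process_in_parallel(ids)
  workers = ids.map do |id|
    reader, writer = IO.pipe
    pid = fork do
      reader.close # the child only writes
      Marshal.dump({ pid: Process.pid, steps: handle_order(id) }, writer)
      writer.close
    end
    writer.close # the parent only reads
    [pid, reader]
  end

  workers.map do |pid, reader|
    result = Marshal.load(reader)
    reader.close
    Process.wait(pid) # reap the child once its result has arrived
    result
  end
end

process_in_parallel([1, 2]).each do |result|
  puts "pid #{result[:pid]} handled: #{result[:steps].join(', ')}"
end
```

Each order reports a different process ID, and the only thing the processes exchange is the final result sent back over the pipe.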

Note that this form of parallelism can be more memory intensive than multithreading, depending on its use. I’ll cover more on this in Part 2 of this series.

Tying It Back to Rails

When building Ruby on Rails applications, you may be limited by a multitude of factors while trying to maximize your app’s performance.

You may not have enough CPU cores on your server. Or you may not have enough servers to support the code the way you wrote it. You may run into race conditions (one thread changes an object while another thread is still using it).

Simply deciding to use parallelism without considering everything holistically could lead to disaster in the form of inadequate memory.

Likewise, writing multithreaded code without considering race conditions could lead to bugs.
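To make the race condition concrete, here is a small sketch in plain Ruby, not Rails-specific and not the tooling promised for Part 2. Several threads update one shared counter, and a `Mutex` makes sure only one of them touches it at a time. The method name `count_pours` is made up for illustration.

```ruby
# Several threads add to one shared total. The read-modify-write in `+=` is
# not atomic, so unsynchronized threads could overwrite each other's updates.
# Wrapping the update in Mutex#synchronize lets one thread in at a time.
def count_pours(threads:, pours_each:)
  total = 0
  lock = Mutex.new
  workers = Array.new(threads) do
    Thread.new do
      pours_each.times do
        lock.synchronize { total += 1 }
      end
    end
  end
  workers.each(&:join)
  total
end

puts count_pours(threads: 4, pours_each: 1_000) # prints 4000
```

Without the lock, the total could occasionally come up short, and bugs like that are hard to reproduce because they depend on exactly when the threads happen to switch.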

It is critical to understand concurrency and the different types of concurrency as well as the syntax and libraries at your disposal to make the most informed choice about how to handle it in your application.

In the second part of this series, I will provide code examples from Rails that utilize the concepts discussed above while also touching on built-in Rails tools that can help prevent things like race conditions or memory leaks. I’ll also introduce a concurrency feature in Rails that won’t solve all your problems, but may help.